“Prediction is very difficult, especially about the future.” – Niels Bohr, Mark Twain, Yogi Berra, or perhaps someone else.

I think the nascent field of learning analytics is facing its first serious, defining issue.

It seems the claims for the effectiveness of Course Signals, Purdue University’s trailblazing learning analytics program, are not quite as robust as they seemed. You can get a quick journalistic overview from Inside Higher Ed’s story (which has good links to the sources) or Times Higher Education’s version (which appears identical, except that the useful links have been stripped).

This post is a bit long. As Voltaire, Proust, Pliny or (again) Mark Twain may have said, my apologies, but I didn’t have time to write a short one. It’s important, though.

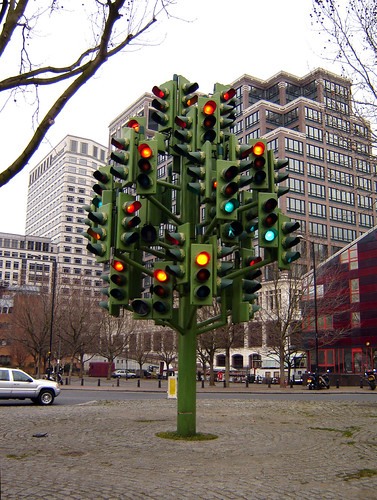

In a nutshell, Course Signals uses a predictive model to give students a red, amber or green ‘traffic light’ representing the model’s estimate of how likely they are to pass. The tutor runs the model to generate the Signals, and passes those on to students along with personalised advice and direction to sources of support. Not all courses at Purdue adopted Signals at once, and Kimberly Arnold and Matthew Pistilli (disclosure: I’ve met and admire both of them) used this as a ‘natural experiment’ to explore whether having Signals, versus not having them, had any impact on retention and grades. They published their findings (freely downloadable PDF version) in the proceedings of LAK12. The paper was widely cited: the approach has taken off, and the study is unusual among educational interventions in actually having a control group.

Then in August, Mike Caulfield spotted a problem, which he expanded on in late September when he saw a Purdue press release repeating the claim. The Purdue researchers didn’t appear to have controlled for the number of courses taken. He explained it with an analogy: the longer you live, the more car accidents you are likely to have had, so people who have had more car accidents live, on average, longer than people who have had very few. But of course car accidents don’t cause you to live longer. Similarly, it looks like the impressive figures for Course Signals (CS) don’t account for the fact that students who take more courses (because they’ve been retained) are more likely to have had more courses with CS.

Mike Feldstein picked this up, and the extremely capable Al Essa went the distance and coded up a simulation of students being randomly given chocolates on each course they took. Sure enough, the figures he got looked very similar to those in the CS paper: there’s a strong correlation between the number of chocolates the sim-students got and their retention, but the chocolates obviously don’t make them stay. Mike Feldstein has kept the issue alive over the last few weeks at e-Literate, and Mike Caulfield posted a useful explainer there yesterday, in response to the story hitting the HE press. The comments from Matt Pistilli seem to confirm indirectly that this is a real issue with the analysis.
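
Al Essa’s own code isn’t reproduced here, but the idea is simple enough to sketch. The Python below is a minimal, hypothetical version: every parameter (four terms, four courses a term, a 25% chance of a chocolate per course, a 20% chance of dropping out between terms) is an assumption chosen for illustration. Chocolates are handed out at random and have no effect on dropout, yet students with two or more chocolates should come out with much higher retention, simply because students who stay longer take more courses and so collect more chocolates.

```python
import random

def simulate(n_students=10_000, terms=4, courses_per_term=4,
             p_chocolate=0.25, p_dropout=0.2, seed=1):
    """Simulate students who get random chocolates that have no effect on them.

    Returns a list of (chocolates_received, retained) pairs, where `retained`
    means the student stayed for all `terms` terms. All parameters are
    hypothetical illustration values.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_students):
        chocolates = 0
        retained = True
        for term in range(terms):
            # Each course this term independently hands out a chocolate.
            chocolates += sum(rng.random() < p_chocolate
                              for _ in range(courses_per_term))
            # Dropout between terms ignores chocolates entirely.
            if term < terms - 1 and rng.random() < p_dropout:
                retained = False
                break
        results.append((chocolates, retained))
    return results

def retention_by_group(results, threshold=2):
    """Retention rate for students with >= threshold chocolates (True) vs fewer (False)."""
    groups = {True: [], False: []}
    for chocolates, retained in results:
        groups[chocolates >= threshold].append(retained)
    return {k: sum(v) / len(v) for k, v in groups.items()}

if __name__ == "__main__":
    rates = retention_by_group(simulate())
    print(f"retained, 2+ chocolates:  {rates[True]:.0%}")
    print(f"retained, 0-1 chocolates: {rates[False]:.0%}")
```

Reading “chocolates” as “courses using Signals” gives the shape of the problem: any comparison by number of CS courses taken is tangled up with how long a student stayed.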

This issue, and how we handle it, raises all sorts of important questions and challenges – and far more widely than for Purdue. There are tensions between mathematical/technical complexity and wide deployment, between research and enterprise, and between multidisciplinarity and rigour.

Why does it matter?

Learning analytics is a new field. The Course Signals project is perhaps its best example: it combines big(ish) data, smart maths (the predictive model), and – most important to my mind – an embedding in a social system that makes effective use of it. Even better, and unlike most educational interventions (and certainly many in learning analytics), they had successfully deployed it at scale, had evidence of impact, and had a control group (albeit an imperfect one).

I’m not sure I know of any learning analytics project that had better evidence to support it. (There are several stronger findings in parts of the ITS/EDM field, though.) There are lots of imitators (many of which cost real money) that have no peer-reviewed evidence of impact. I’ve even heard of predictive analytics projects where retention went down when they were introduced. (Which I think shows the importance of the intervention being well designed: emailing students to say “the computer says you have next to no chance of passing this course” is probably not very motivational.) And now the evidence for that leading project doesn’t look as good as it did.

I’m very, very keen to find out what happens when the Purdue people do run the appropriate analyses to control for this effect.

Is Course Signals any good?

I still think so. I’m moderately confident there is a genuine, moderate positive effect of Course Signals. The problem raised seems a real one: Al Essa’s simulation in particular convinces me that the long-term effect on retention is much smaller than it looked. But there is a single-course effect, which I suspect is relatively robust. We don’t know for sure yet. I do expect the claim that “two courses is the magic number” is spurious, though.

What should happen next?

I really don’t think this is an issue Purdue can ignore and let lie.

Ideally, the Purdue researchers should urgently re-analyse their data, taking world-class statistical advice (Purdue has a decent Department of Statistics), publish the outcome in raw, unreviewed form as fast as possible, and then write it up and submit it for peer review. The latter is likely to take a long time.

The learning analytics field needs to take a very serious look at how we work. This should have been picked up at peer review, or certainly afterwards. Damn it, I should’ve noticed this – I’ve read and cited that paper enough times.

On the other hand, to be fair to us as a community, it’s probably only very obvious with hindsight. And it has been picked up, and it’s reaching more people; indeed, one of my main purposes in posting this is to bring it to a wider audience.

Peer review is hard, flawed, and massively resource-intensive. An underlying problem is that, outside a tiny handful of journals, it’s all done as a semi-voluntary task by people who get very little benefit from doing so. It’s particularly hard in learning analytics: a really good paper will cover social, technical, pedagogical and statistical issues, so finding reviewers with both the expertise and the time is a real struggle.

If learning analytics has any merit as a field, it’s in the combination of disciplines. We need to be very careful that we don’t lose rigour in the mix.

This affair also shows up very pointedly the difficulties with the traditional model of publishing, I think – although as ever the medical profession is ahead of us, with mechanisms like rapid rebuttal.

Data sharing

Mike Feldstein has been keen that the Purdue researchers should release their data so others can verify the analysis. Matt Pistilli raised issues of student anonymity, to which Mike responded:

Course Signals collects substantial fine-grained data about student activity, some of which could be personally identifying. None of which is necessary in order to respond to the questions being raised. In order to test the retention claims, we only need to see gross outcomes, which are easily anonymized. In fact, I would be surprised if Purdue does not already have an anonymized version of the data. But even if they don’t, the amount of effort required to scrub it should be relatively modest and easily proportional to the level of concern being raised here.

I don’t think that’s quite true. I think he’s right that it’s likely to be only a reasonable amount of work to, say, remove personal identifiers from the data and replace them with a pseudo-randomly generated ID. Whether it’s modest work or not I wouldn’t like to say: it would need someone who knows what they’re doing with databases to avoid messing it up, and it’s very easy to leak data inadvertently during redaction, so you really need someone else with experience of those sorts of issues to check over what you’re planning to release. It would be irresponsible to release that sort of data hastily. (And outside the US, messing up would risk prosecution under Data Protection legislation.) But even that’s not going to anonymise the data.
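
As a rough illustration of the easy part, here is a minimal Python sketch of replacing real IDs with random tokens. The record layout and the `student_id` field name are hypothetical. Note that it hides only the identifier: every other field passes through untouched, which is exactly why this step alone doesn’t anonymise anything.

```python
import secrets

def pseudonymise(records, id_field="student_id"):
    """Return copies of `records` with the real ID replaced by a random token.

    The same real ID always maps to the same token within one call, so a
    student's rows still link together. The mapping is discarded on return.
    `id_field` is a hypothetical field name.
    """
    mapping = {}   # real ID -> token, kept only in memory
    used = set()   # guard against the (very unlikely) token collision
    out = []
    for rec in records:
        real_id = rec[id_field]
        if real_id not in mapping:
            token = secrets.token_hex(8)
            while token in used:
                token = secrets.token_hex(8)
            used.add(token)
            mapping[real_id] = token
        new_rec = dict(rec)  # copy, so the caller's data is untouched
        new_rec[id_field] = mapping[real_id]
        out.append(new_rec)
    return out
```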

De-anonymisation is alarmingly easy. For instance, it’s possible to identify social network users simply from knowing which groups they’re in, and most people can be identified uniquely from their work and home locations even when anonymised to a city-block level. To be able to do this analysis at all, the minimum possible dataset would include information specifying that student X took these courses during these terms and whether they passed, failed or dropped out. I’d be very, very surprised if you can’t identify at least some students from that, and I’d expect you’d be able to get quite a few matches from very fuzzy data.
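
A quick way to see the problem is to count how many students have a course history that nobody else in the dataset shares. The sketch below assumes a minimal, hypothetical layout of (pseudonym, course, term, outcome) rows, along the lines of the minimum dataset just described; the course codes are made up. Any student whose history is unique could be re-identified by anyone able to match that history against other information.

```python
from collections import Counter, defaultdict

def unique_fraction(rows):
    """Fraction of students whose full course history is shared by nobody else.

    rows: iterable of (pseudonym, course, term, outcome) tuples (hypothetical layout).
    """
    histories = defaultdict(set)
    for pid, course, term, outcome in rows:
        histories[pid].add((course, term, outcome))
    # How many students share each exact history?
    signatures = Counter(frozenset(h) for h in histories.values())
    unique = sum(1 for h in histories.values() if signatures[frozenset(h)] == 1)
    return unique / len(histories)

# Toy, made-up data: students a and b share a history; c and d are unique.
rows = [
    ("a", "MA101", "F12", "pass"), ("a", "CS101", "F12", "pass"),
    ("b", "MA101", "F12", "pass"), ("b", "CS101", "F12", "pass"),
    ("c", "MA101", "F12", "fail"), ("c", "HI201", "F12", "pass"),
    ("d", "MA101", "F12", "pass"),
]
print(unique_fraction(rows))  # 0.5
```

With real records spanning several terms and dozens of courses, you would expect that fraction to climb quickly.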

This is a big issue for the field. Best practice in science is certainly trending towards data publication. It’d be great if we could follow that. But learning analytics data is almost always going to have this sort of anonymisation problem. Even worse, it’s also almost always going to hit commercial (or quasi-commercial) sensitivities. Data publication is going to become a norm much faster in fields where the majority of the money comes from funders who have a primary interest in research. Here, most of the funding comes from institutions, companies and foundations who see their money as a way of improving student learning and only secondarily care about the research output, if at all. It would, of course, be nice to change that attitude, but it’s not going to happen fast and it’s not something we can do on our own.

Testing is hard

Another fundamental problem is that testing of any kind is hard.

Almost nobody wants to do proper testing of educational interventions. It’s hard work to do at all, and very hard to do well. And at the moment it’s an almost impossibly hard sell to senior managers – and if you can’t get their buy-in, you’re not going to get the scale that you need.

Really robust testing means, to my mind, randomised controlled trials (RCTs), and preferably as blinded as possible.

Arguing the case for this is beyond the scope of this small post (I started, but realised where it was going). Suffice it to say that the medical research community is way ahead of us here, and we can pick up their good practice. We might worry that it’s unethical to deny someone something we think will help them, but although education is important, it’s rarely a literal question of life or death – at least, not immediately. The medics have even worked out how to do the sums so you can stop a trial early as soon as you have gathered enough evidence.

It’s not as simple as some people make out. Again, that’s a long post (possibly an article) in itself, but suffice it to say that in medicine, everyone can agree that if your intervention dramatically improves all-cause mortality then it’s a good thing. In education, we don’t have that sort of robust metric, and interventions that dramatically improve grades tend to raise suspicion of falling standards (often rightly). And higher education has its own particular challenges.

But until we start doing a lot more of this sort of thing, we’re going to have a lot of interventions with far less evidence to support them than Course Signals. And that of necessity means we’re doing a lot of things that are not merely unhelpful but actively harmful.

Correlation and causation

This is the central problem we have here, and it’s famously hard. Al Essa linked it to the Euthyphro dilemma (aka the Good vs God question: does God command the Good because it’s good, or is it good because it’s commanded by God?), so it’s been known since around 400 BCE.

XKCD is fun on the topic.

And so is what I think of as the wonderful “Did Avas cause the US housing bubble?” infographic from Businessweek.

The correct sums to do in this instance aren’t instantly obvious to me. Several people have made suggestions, and they’d all be better than what was done in the original paper, but none of them seems as statistically sophisticated as I think is needed to answer the question “does CS cause improvement in retention?” clearly. Something like partial correlations controlling for number of courses taken might do it. But even there I worry, because pass/fail/dropout is not remotely a continuous variable, and I’m not at all certain that retention rates for courses are distributed with Gaussian/normal errors.

The Right Answer probably gets terribly Bayesian and into the theory of causal inference pioneered by Judea Pearl. (Basic outline here.) But this is a live research area in itself, and it’s not easy to apply. (I was fairly sure I hadn’t got to grips with it myself, and I was able to verify this by trying and failing to apply it here – though admittedly on a tight time budget.)

… though on reflection this is perhaps me over-correcting, and the chi-squared analyses from the original paper, simply redone with corrected denominators, should be good enough to be going on with.
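
For concreteness, here is one way “controlling for number of courses” could look. It is a sketch, not the original paper’s method and not a recommendation: students are split into strata by how many courses they took, each stratum gets its own 2×2 table (CS exposure against retention) and Pearson chi-squared test, and the Cochran–Mantel–Haenszel statistic pools the strata into a single test. The table layout is an assumption, and with only a few students in a stratum the chi-squared approximation becomes unreliable.

```python
import math

# Each 2x2 table is (a, b, c, d), a layout assumed for this sketch:
#   a = used CS and retained,   b = used CS and not retained
#   c = no CS and retained,     d = no CS and not retained

def chi2_2x2(a, b, c, d):
    """Pearson chi-squared statistic (no continuity correction) for one 2x2 table."""
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0  # an empty row or column: treat as no evidence either way
    return n * (a * d - b * c) ** 2 / denom

def p_value_1df(chi2):
    """Upper-tail p-value of a chi-squared statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))

def cmh(tables):
    """Cochran-Mantel-Haenszel statistic (1 df, no continuity correction) pooling strata."""
    diff = 0.0
    var = 0.0
    for a, b, c, d in tables:
        n = a + b + c + d
        if n < 2:
            continue  # a stratum this small carries no information
        diff += a - (a + b) * (a + c) / n  # observed minus expected count
        var += (a + b) * (c + d) * (a + c) * (b + d) / (n * n * (n - 1))
    return diff ** 2 / var if var > 0 else 0.0
```

Given a (hypothetical) dict `tables` mapping number of courses taken to that stratum’s table, `cmh(tables.values())` gives the pooled statistic and `p_value_1df` turns it into a p-value. Comparing students only with others who took the same number of courses is what removes the chocolate effect.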

Percentage pedant points

One small thing I would urge everyone in this debate to pay attention to is the distinction between percentages and percentage points. Many people have confused these, and it’s an easy slip to make, so I won’t call out examples. (Not least because I’m sure I’ve made minor errors in this post.) But it’s sometimes not clear whether people have confused them or not, which makes redoing their sums hard.

Let me spell it out. An increase of X% is not the same as an increase of X percentage points. For instance, if students who get chocolates have a 95% retention rate, but students who didn’t get them have only an 85% retention rate, that’s an improvement of 10 percentage points. An improvement of 10% in the retention rate from 85% would give 93.5% (85% × 1.1).
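
The same arithmetic in a few lines of Python, using the chocolate figures above. Going from 85% to 95% is a 10-point rise but, measured relative to the starting rate, an increase of about 11.8%. The function names are just illustrative.

```python
def point_change(before, after):
    """Change in percentage points: a simple difference of two percentages."""
    return after - before

def relative_change(before, after):
    """Change expressed as a percentage of the starting value."""
    return (after - before) / before * 100

def increase_by_percent(rate, pct):
    """Apply a relative increase of `pct` per cent to `rate`."""
    return rate * (1 + pct / 100)

print(point_change(85, 95))                   # 10 (percentage points)
print(round(relative_change(85, 95), 1))      # 11.8 (per cent)
print(round(increase_by_percent(85, 10), 1))  # 93.5
```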

Ethics

One underlying finding that nobody’s even questioned (yet!) is that we can predict student success to a certain degree. I’m really pretty sure that we can – and to a surprisingly high degree of confidence. (Though of course far, far from certainty.)

What do you do about that information?

This is a really hard question, and there is no good answer to it – or at least, no simple answer that doesn’t have serious drawbacks. Targeting some support to students who appear to be at risk seems entirely sensible. But what if we know that this support has a very low chance of working? (It looks like our poster-candidate for a good intervention here is not as good as we thought.) How much effort should we spend on this? Is it fair to spend much more resource on students for whom there is little chance of making a difference than on those for whom there’s a better chance? In the limit it can’t be right for a university to spend all its time supporting no-hoper students and none challenging and stretching the brightest and best. Do we refuse to take on students who have low predicted success? That will almost certainly reinforce existing structural disadvantage. For my university – the Open University – I think it’s clear that we can’t shut our doors: that’s part of the whole point of us as an institution. Other universities with different aspirations might not see this as a problem at all.

There are no answers that are wholly good, but there is at least one answer that I think is definitely bad, and that is to not look at prediction data at all and pretend it doesn’t exist. This is a very understandable reaction to a hard problem, and it is of course very widespread. But it’s not, I don’t think, a moral answer. I also don’t think it’s an answer that you can rely on to keep you out of court: the central principle of negligence is that people must act to avoid causing “reasonably foreseeable” harm, or compensation is due.

Final points

This is very much Interesting Times for analytics in higher education. I certainly want to help us improve as a community. If we can’t get data analysis right, who in higher education is going to?

Kudos to Mike Caulfield, Mike Feldstein and Al Essa for raising this problem and working on it. And good luck and sympathy to Matt Pistilli.

–

This work by Doug Clow is copyright but licensed under a Creative Commons BY Licence.

No further permission needed to reuse or remix (with attribution), but it’s nice to be notified if you do use it.

Thanks, Doug. This is a superb addition to the conversation, and I appreciate your informed counterpoint on the issue of anonymization and release of the raw data. One correction, though: The explainer published on e-Literate was written by Mike Caulfield. We are delighted to have him as a periodic featured blogger now. Unfortunately, misattribution of credit is a fairly common problem on a group blog like ours (and I tend to be the beneficiary of the misattribution). We are trying to find ways to make post authorship more obvious.

Anyway, great stuff.

Thanks for the remarks, and thanks again for your work bringing this to light.

And thanks for the correction! Sorry about that – and sorry particularly to Mike Caulfield. I’ve edited the post to reflect the attribution correctly.

(For the record, my original post said erroneously that Mike Feldstein had written the explainer on e-Literate.)

Thanks for a very thorough post on the topic, Doug. I continue to appreciate the passion with which we scrutinize outcomes, all in the name of furthering the state of the art. I want to make sure that the pendulum doesn’t swing too far to one side, though, and scare off or delay projects that are in the works.

As with most things, there’s a balance. On one side, you might have a model that was created with little statistical validity, rolled out hastily, and then a PR person jumps on it to proclaim success in the Big Data world. On the other side, you might see a dedicated team who performs thorough research and testing, but the price is that it is still in beta after 5 years and only 200 students have been exposed to the tool. I don’t think the bar should be held so high that most projects fall into the latter case. As long as the data are good and IRB processes are followed properly, I think the space is collaborative enough that all will benefit.

I tend to look at Course Signals as the catalyst that spurred the wave of higher ed analytics work in the last 5 years (APUS, Rio Salado, PAR Framework, Maryland…). It certainly inspired the work I did when I worked at University of Phoenix (http://bluecanarydata.com/evidence…full disclosure…I recently started my own analytics company so I am biased towards more institutions attempting to implement analytics solutions). Kudos to John Campbell and Matt Pistilli for implementing a solution and allowing us to have this conversation. I look forward to many more such examples.

—

Mike Sharkey

President, Blue Canary

mike@bluecanarydata.com

@mjshark

Doug — this is a great post. And I like the fact it contains everything from percent vs. percent gain warnings to explanations about the difficulties of anonymization.

I had a rant that I wrote but didn’t publish a while back, and the basic point was that much of the issue is that too many critics of the analytics literature fall into the “all data is bogus” camp. Normally in a discipline you could expect the critics of an approach to go after its methods and conclusions and scrutinize them. What tends to happen here is that the critics don’t engage with the quantitative element at all.

Even now it depresses me that so many people interpret the raising of this issue as a point for the “Analytics is bogus” camp. As you point out, the whole reason we are able to spot the faults here is that Purdue went through the effort to design the sort of study we’d like to see more of. That’s not the behavior of a bad actor, that’s what we want.

As learning analytics evolves, more pro-analytics camps will emerge, differentiate themselves, and hopefully provide the sorts of statistically literate challenges we need. Until then, we’re vulnerable to our own biases more than we might otherwise be.

Thanks Mike – and thanks again for picking this up.

I’m right with you that Purdue are good guys who made a slip, not bad ones.

I do want to note, though, that their study, although far better than most, wasn’t ideal: my understanding is that the control group arose not out of a desire to do a high-quality randomised controlled trial (though I suspect the researchers would’ve preferred that!), but out of the fact that not all courses adopted Course Signals at the same time. I don’t know the details, but my understanding from other publications and conversations is that it was exactly the sort of complex, negotiated and sometimes contested process you’d expect in trying to introduce teaching innovation in a top-class research university. Persuading people to also engage in a randomised controlled trial would have made it even harder to get it taken up – and might well have made CS sink without trace. Re-reading the paper, I’m not sure this is spelled out, which it should be, because, of course, it raises the possibility of other confounders in the data. It seems quite possible that the courses that were early adopters of CS had more active, engaging faculty who were already motivated to improve retention.

Fair enough, and I do think we need to have a conversation as a community about how to handle these challenges creatively and with better transparency. But let’s not lose sight of the fact that the flaw that has (tentatively) been discovered is not with the experimental design but with the data analysis. Further, that flaw was uncovered by a non-statistician who happens to have good intuitions about data. Peer review should have caught this problem.

I don’t know if this is helpful at all, but we did develop a predictive model at the OU back in 2002 using logistic regression methods, which was surprisingly accurate (we didn’t have the wit to call it learning analytics). We used it to divide our students into experimental and control groups with the same average prediction of success. We then made proactive motivational contact with the experimental group and got an average increase in retention of 5 percentage points over 4 years.
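
The paper isn’t reproduced here, so the following is only a guess at one way to form two groups with the same average prediction of success, and it may not be the method the OU used. Students are sorted by predicted probability (taken as given, standing in for the output of a logistic regression model), neighbours are paired off, and a coin flip sends one of each pair to each group. This is matched-pair randomisation; the function names are hypothetical.

```python
import random

def matched_split(students, seed=0):
    """Split students into two groups with near-identical mean predicted success.

    `students` is a list of (student_id, predicted_probability) pairs.
    Neighbours in the ranking are paired, and a coin flip decides which member
    of each pair goes to the experimental group.
    """
    rng = random.Random(seed)
    ranked = sorted(students, key=lambda s: s[1])
    experimental, control = [], []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)
        experimental.append(pair[0])
        control.append(pair[1])
    if len(ranked) % 2:
        # With an odd number of students, the unpaired one goes to a random group.
        (experimental if rng.random() < 0.5 else control).append(ranked[-1])
    return experimental, control

def mean_p(group):
    """Average predicted probability of success in a group."""
    return sum(p for _, p in group) / len(group)
```

Because the assignment within each pair is random, any later difference in retention between the groups can more plausibly be put down to the contact itself rather than to who was selected.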

The original paper is in Open Learning 21(2), June 2006, pp. 125–138, or on my website.

Ormond Simpson

Thanks Ormond, that is very helpful. And I certainly don’t think you need feel witless for not using the term learning analytics a decade before it became a trendy buzzword.

I did know about this work already. In fact I’m moderately sure I’ve talked to you about it in the past. I’ve certainly talked to Alan and Alison about it, and tried to persuade people to do more to embed it as an approach wherever I could. I hadn’t seen this paper, though, so thank you particularly for that.

You might like to know that we have a brand new individual predictive model – created in the last few months – again based on logistic regression, but using more data than was available then. (Student characteristics and behaviour have also changed.)

At the moment it’s being used for planning purposes: it’s pretty accurate at an individual level, but it’s excellent (to the point of creepy) when you aggregate it to e.g. module, programme, qualification or faculty level, and individual predictions let you aggregate in whatever way you like.
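
Why aggregated predictions can look so much better than individual ones can be sketched in a few lines. Assuming each student’s predicted pass probability is well calibrated and students pass or fail independently (both assumptions; the OU model’s internals aren’t described here), the expected number of passes in a group is the sum of the probabilities, while the standard deviation grows only with the square root of the group size, so the relative error shrinks as groups get larger. Because the predictions are per student, the same function works for any grouping.

```python
import math
from collections import defaultdict

def aggregate(predictions):
    """predictions: iterable of (group, p_pass) pairs, one per student.

    Returns {group: (expected_passes, standard_deviation, n_students)},
    assuming calibrated probabilities and independent outcomes.
    """
    totals = defaultdict(lambda: [0.0, 0.0, 0])
    for group, p in predictions:
        t = totals[group]
        t[0] += p            # expected passes: sum of probabilities
        t[1] += p * (1 - p)  # Bernoulli variance, summed assuming independence
        t[2] += 1
    return {g: (expected, math.sqrt(var), n)
            for g, (expected, var, n) in totals.items()}
```

For example, `aggregate([("M101", 0.8), ("M101", 0.6), ("M202", 0.9)])` (module codes hypothetical) gives per-module expected passes; grouping by programme or faculty just means passing a different label as `group`.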

Based on your experiences, the Signals stuff, and other evidence, I’m lobbying to use it to drive targeted support, mediated by the tutor. This isn’t a small job, though!

Thanks for those kind words Doug. It’s good to know that this work is being developed along the lines you describe. And thanks for pushing along the idea that OU tutors might use it.

But as an OU tutor myself (still, after 39 years) I’m constantly infuriated that the OU can’t even do simple things for tutors, such as providing mail and email merge, text messaging from a PC, automatic warnings about students missing web sign-in schedules, statistically analysed benchmarked retention feedback, etc., so I’m not getting too hopeful.

PS: Paul Prinsloo at UNISA used a predictive system and gave his students the results. He found they did better than expected…